With the previous article about natural language processing (NLP) and large language models (LLMs) as background, I’d like to walk you through an example of using an LLM to create a basic application that enables a non-specialist to understand the content of long, complex legal documents by asking natural language questions. (I have to give credit to David Shapiro who has a great YouTube channel that provided my initial education in this area.)

As an example document, I will use the guide “Medicare & You 2023”. This is the official government handbook regarding Medicare. It describes the program and the various services it does and does not pay for. Because there are frequent changes to Medicare, it is important to get the most up-to-date information. ChatGPT is currently trained on information collected from the internet through 2021, so any information it has on Medicare is 2 years old at best. I imagine there are millions of people who might have a simple question about Medicare but do not want to read through this 128-page document. So building a custom AI system that users could query makes a good demo project.

Building the Document Index

The first step in building this system is to convert our source text into a vector representation of the document. As discussed earlier, converting the text to vectors lets us represent some of the semantic meaning of the text in a numeric format the computer can search through efficiently. To be a bit more accurate, the computer will be able to place the meaning of words into a vector space for comparison. (James Briggs has some great videos on this.)

To begin the process, I need to convert the large .pdf version of the Medicare guide to plain text. In Python, the PyPDF library makes this super-easy. Once the .pdf is converted to a plain-text .txt document, I need to break that one large block of text into smaller segments or chunks of text to be more workable. Then each chunk of text will be converted into a vector. These are called vector embeddings. Why break the file into chunks? Well, there are two reasons:

First, if we convert the entire document into a single embedding, we’d have a single embedding representing the meaning of the entire document. This would be perfect if we wanted to do a search for this document amongst embeddings of other documents with other meanings. But we want to search within this document for smaller pieces of information it contains. Therefore, we need vector embeddings of all the smaller pieces of information we might want to search for.

Second, we’ll eventually want to send some of the information we find back to our LLM via an API for some language processing. In our case, the API for GPT-3 has a limit on the amount of text we can send in a single API call, so it is useful to deal only with chunks of text that are small enough to be fed to the API.
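The chunking step can be sketched in a few lines of Python. This is a minimal illustration, not the exact code behind the demo: the function name `chunk_text` is hypothetical, and it assumes the plain text has already been extracted from the .pdf and that chunks are cut at a fixed character count (4,000 in this demo) without regard to sentence boundaries.

```python
def chunk_text(text: str, chunk_size: int = 4000) -> list[str]:
    """Split text into consecutive chunks of at most chunk_size characters.

    Assumption: a plain fixed-size split. Chunks may start or end
    mid-sentence; a real system might split on paragraphs instead.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


# Usage: a 9,000-character text yields chunks of 4,000, 4,000 and 1,000 characters.
sample_chunks = chunk_text("x" * 9000)
print([len(c) for c in sample_chunks])
```

Fixed-size splitting is the simplest option. Its main drawback is that a fact can be cut in half at a chunk boundary, which is one reason to retrieve more than one chunk per question.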

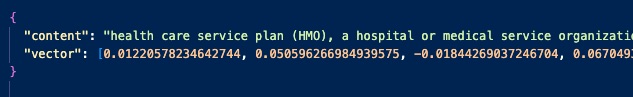

In our case, we will break the overall text into chunks of 4,000 characters. Each of these chunks is fed to OpenAI’s “text-similarity-ada-001” model. What we get back for each text chunk is a vector of 1536 dimensions; we’ll call each of these a Content Vector. From this we compose a JSON object that contains our original text chunk and its Content Vector. We will end up with an array of these JSON objects, one per chunk.
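To make the shape of one such object concrete, here is a rough sketch built in Python. The key names `content` and `vector` are assumptions chosen for illustration, the text is a placeholder rather than a real excerpt, and the three numbers stand in for the full 1536-value vector.

```python
import json

# Hypothetical shape of a single index entry (key names are assumptions).
record = {
    # Placeholder for one 4,000-character chunk of the guide's text.
    "content": "<one chunk of text from the Medicare guide>",
    # Placeholder values; the real Content Vector holds 1536 numbers.
    "vector": [0.0123, -0.0456, 0.0789],
}

# Show the record as it would appear inside the JSON array.
print(json.dumps(record, indent=2))
```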

For now, I’m saving this array of JSON objects as one large JSON file that will act as a semantic index of the information contained in the Medicare guide.
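Building and saving the index might look something like the sketch below. The function names and the `content`/`vector` keys are hypothetical. To keep the example independent of any particular API client, the embedding call is passed in as a function named `embed`; in the real system that function would send the chunk to the embedding model and return its vector.

```python
import json
from typing import Callable


def build_index(chunks: list[str], embed: Callable[[str], list[float]]) -> list[dict]:
    """Pair every text chunk with its embedding vector.

    `embed` is an assumed stand-in for the call to the embedding model.
    """
    return [{"content": chunk, "vector": embed(chunk)} for chunk in chunks]


def save_index(index: list[dict], path: str) -> None:
    """Write the whole index to one JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(index, f)


def load_index(path: str) -> list[dict]:
    """Read the index back from the JSON file."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)
```

A single JSON file is fine for a 128-page document. For a much larger collection, a dedicated vector database would likely be a better fit.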

Semantic Similarity Search

The next step is to repeat the process with our question. Assuming each question will be a single sentence, we won’t have to break it into smaller chunks, so we can skip straight to converting the question into a vector embedding. We’ll call this our Query Vector.

Now, to find the chunks of text in the Medicare guide that are most relevant to our question, we can do a semantic similarity search. We compare our Query Vector to each of our Content Vectors by computing the dot product of each pair, which lets the computer quickly find the Content Vectors that are most similar to the Query Vector. We’ll rank those dot product results from most similar to least similar and use the top 3 JSON objects with the highest dot products. In theory, these are the sections of the Medicare guide whose content is most similar to the content of the question.
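The ranking step can be sketched as follows. This is an illustrative version in plain Python, with hypothetical function names and the assumed `content`/`vector` keys; the two-dimensional vectors are toy values, not real embeddings.

```python
def dot(a: list[float], b: list[float]) -> float:
    """Dot product of two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def top_matches(query_vector: list[float], index: list[dict], k: int = 3) -> list[dict]:
    """Return the k index entries whose vectors score highest against the query."""
    ranked = sorted(index, key=lambda rec: dot(query_vector, rec["vector"]), reverse=True)
    return ranked[:k]


# Toy index with 2-dimensional vectors (real Content Vectors are far longer).
toy_index = [
    {"content": "a", "vector": [1.0, 0.0]},
    {"content": "b", "vector": [0.0, 1.0]},
    {"content": "c", "vector": [0.7, 0.7]},
    {"content": "d", "vector": [-1.0, 0.0]},
]
best = top_matches([1.0, 0.0], toy_index)
print([rec["content"] for rec in best])  # prints ['a', 'c', 'b']
```

If the embedding vectors are normalized to unit length, ranking by dot product gives the same order as ranking by cosine similarity. A linear scan like this is perfectly adequate for a few hundred chunks.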

Answering the Question

So far we’ve only used our language model to create the vector embeddings, but now we’ll use the model in an entirely different way. For each of our three content chunks, we’ll create the following prompt for the GPT-3 model:

Use the following passage to give a detailed answer to the question:

Question: [QUESTION TEXT]

Passage: [RAW CONTENT CHUNK]

Detailed Answer:

GPT-3 Completion Prompt

We’ll dynamically replace the question text and the content text and submit the prompt via the GPT-3 API. GPT-3 will do its best to complete the prompt by drafting a concise answer to our question from the text provided. Now, it’s possible that a given content chunk does not provide sufficient information to answer the question. This is why we took the top three content chunks: it increases the chances that we’re providing the language model with enough content to answer the question.
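Filling in the prompt for each of the three chunks could be done along these lines. The template text comes from the prompt above; the names `PROMPT_TEMPLATE` and `build_prompts` and the `content` key are assumptions for illustration. The sketch only builds the prompt strings and leaves out the API call itself.

```python
# The completion prompt from above, with placeholders for the dynamic parts.
PROMPT_TEMPLATE = (
    "Use the following passage to give a detailed answer to the question:\n\n"
    "Question: {question}\n\n"
    "Passage: {passage}\n\n"
    "Detailed Answer:"
)


def build_prompts(question: str, records: list[dict]) -> list[str]:
    """Create one prompt per retrieved chunk (assumes a "content" key)."""
    return [
        PROMPT_TEMPLATE.format(question=question, passage=rec["content"])
        for rec in records
    ]
```

Each prompt would then be submitted separately, giving one candidate answer per chunk.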

Finally, we get to the last step of this exercise. At this point we should have three answers to our question. Hopefully, these answers are similar, though they may address different aspects of the question or provide different nuance. To provide a final answer to the user, we’ll combine all three answers into one block of text and ask our LLM (GPT-3) to write a detailed summary of that text. That should take care of re-drafting the 3 separate pieces of information into one well-written answer. Now that you understand the architecture of this demo, in the next article I’ll walk you through the results.