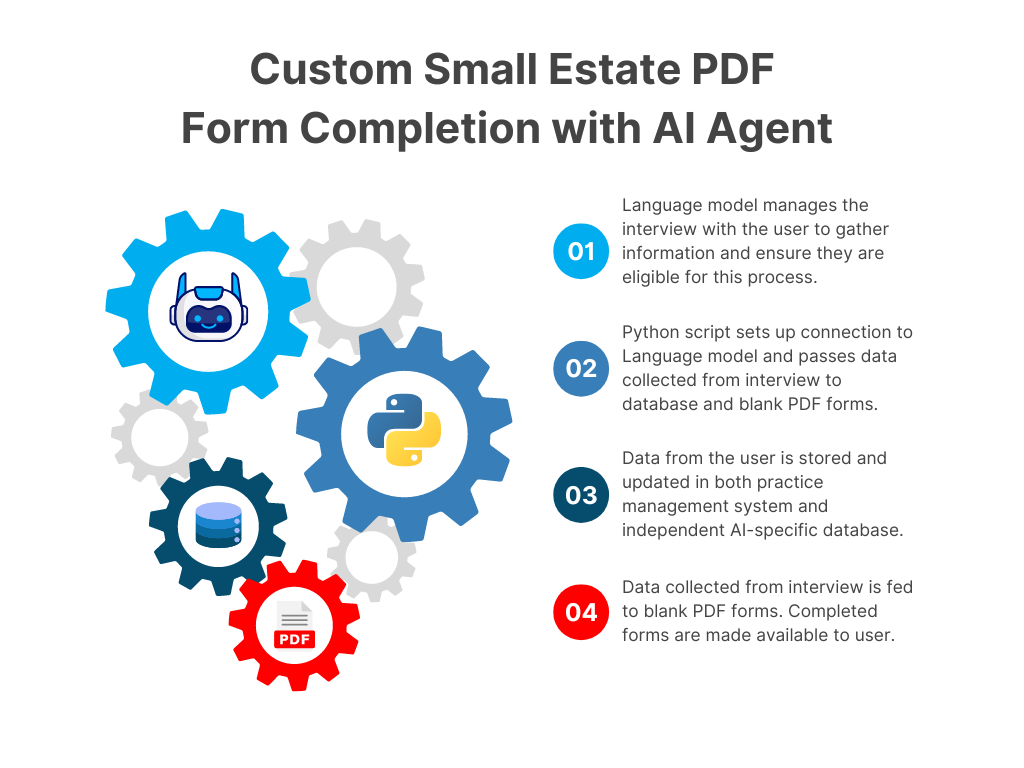

I recently got a call from a friend whose family member had unfortunately passed away, leaving one relatively small asset the family wanted access to. The situation seemed well suited to the simplified process for small estates (more accurately, Disposition of Estate without Administration, §§13000-13606), which wouldn’t require hiring a lawyer or opening formal probate proceedings. Since the form and the process are relatively simple, it seemed like a good opportunity to try building a custom AI agent / chatbot that could interview a user and produce the completed PDF form for them.

The “finished” demo application is here. (I say “finished” because it’s a proof-of-concept that will never really be done.) I’m using OpenAI’s Assistants API to create the agent/assistant that conducts the interview with the user. The prompt currently stands at about 750 words, divided into several sections including:

- Initial Instructions

- Question Format

- Information to Collect

- Output Format

- Concluding the Interview

- Requirements for Small Estate Process

- …and more!

By far the biggest benefit of prompting an LLM to conduct the interview is that I could include a list of information to collect, along with the instruction “You can collect the information in any order. Ask questions to collect information according to the most natural flow of the conversation in the interview.” Rather than having to program every contingent direction a conversation could take, I can leave management of the conversation up to the LLM.
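To make the idea concrete, here is a minimal sketch of how the “Information to Collect” section of such a prompt could be assembled. It is not the actual prompt from the demo: the field names, the section heading format, and the `build_information_section` helper are all assumptions made for illustration. Only the quoted flow instruction comes from the real prompt.

```python
# Hypothetical list of items to collect; the real prompt's list is not shown in this post.
FIELDS_TO_COLLECT = [
    "decedent's full name",
    "date of death",
    "description of the asset",
    "user's relationship to the decedent",
]

# The flow instruction quoted above, passed to the model verbatim.
FLOW_INSTRUCTION = (
    "You can collect the information in any order. Ask questions to collect "
    "information according to the most natural flow of the conversation in "
    "the interview."
)


def build_information_section(fields):
    """Return one prompt section: a heading, the flow instruction, and a bullet per field."""
    lines = ["Information to Collect", "", FLOW_INSTRUCTION, ""]
    lines += [f"- {name}" for name in fields]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_information_section(FIELDS_TO_COLLECT))
```

Note what is missing: there is no branching logic and no question order. The list states what is needed, and the model decides how to get there.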

One experiment I am running with this demo involves eligibility: probate requires some assessment of whether the person filing the form has the appropriate relationship with the deceased to take the property. Rather than translating the logic of intestate succession into if/then statements, I included the relevant code sections as a file separate from the prompt and instructed the assistant to use the Assistants API’s Retrieval functionality to consult those code sections when determining the user’s eligibility to submit the form. This seems to work reasonably well, but needs more testing. The danger is that the actual logic of making this determination happens within the LLM, and I can’t be sure it isn’t misinterpreting the code or falling back on its intrinsic understanding from training data.

At the end of the user interview I have the assistant pass a JSON representation of the information collected to a separate Python script that populates blank PDF forms with the collected data.
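A rough sketch of that hand-off might look like the following. This is not the demo’s actual script. The JSON keys, the PDF field names in `FIELD_MAP`, and both function names are hypothetical, and I’m assuming the third-party `pypdf` library for the form filling (the post doesn’t depend on that choice). The validation step exists because the JSON comes from the LLM and may be incomplete.

```python
import json

# Hypothetical mapping from interview JSON keys to the PDF's form field names.
FIELD_MAP = {
    "decedent_name": "DecedentName",
    "date_of_death": "DateOfDeath",
    "declarant_name": "DeclarantName",
}


def to_pdf_fields(payload):
    """Parse the assistant's JSON string and translate it to PDF field values."""
    data = json.loads(payload)
    # Treat absent or empty values as missing rather than writing blanks to the form.
    missing = [key for key in FIELD_MAP if not data.get(key)]
    if missing:
        raise ValueError(f"missing interview fields: {missing}")
    return {FIELD_MAP[key]: str(data[key]) for key in FIELD_MAP}


def fill_pdf(template_path, output_path, fields):
    """Write a copy of the blank form with the given field values filled in."""
    from pypdf import PdfReader, PdfWriter  # third-party; imported here so the rest runs without it

    writer = PdfWriter()
    writer.append(PdfReader(template_path))
    for page in writer.pages:
        if "/Annots" in page:  # only pages that actually carry form widgets
            writer.update_page_form_field_values(page, fields)
    with open(output_path, "wb") as handle:
        writer.write(handle)
```

Usage would be along the lines of `fill_pdf("blank_form.pdf", "completed.pdf", to_pdf_fields(assistant_json))`, where both file names are placeholders.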

Next Steps

As a proof-of-concept, I feel the project is a success, especially since the point was to accelerate my own learning about pulling these pieces together. Here are the things I would look to do next:

- Enable the agent to provide more information about the legal process and answer likely questions.

- Look at the possibility of moving from a text-based chatbot to an audio/voice-based user interface.

- Integrate with practice management solutions (Clio, MyCase, etc.), automatically creating or updating client records and documents with information from the interview.

- Store the information gathered in a graph database.

- Move the entire thing off the OpenAI Assistants API and onto a local LLM.

Disclaimer: Nothing in this post is intended as legal advice. I’m merely discussing a coding project. You should consult a lawyer directly regarding your individual situation.